Understanding AI – How do Transformer models and GPT work?

20 March 2024

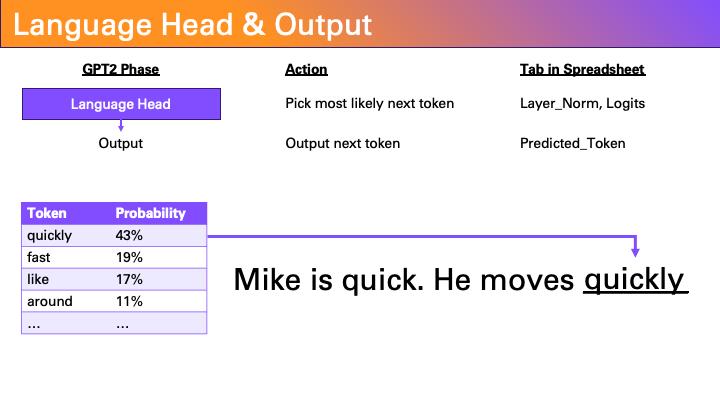

This video explains step by step how Transformers work and uses Excel functions to show the structure of OpenAI’s GPT-2 architecture.

The underlying spreadsheet can be downloaded from the download section, so you can try it out for yourself.

The "Spreadsheets are all you need" implementation is meant to help you understand how Transformers work. It only handles small workloads, so it comes with the following scope and limitations:

- Full GPT-2 small (124M parameters) model including byte pair encoding, embeddings, multi-headed attention, and multi-layer perceptron stages

- Inference/forward pass only (no training)

- Context is limited to 10 tokens in length

- 10 characters per word limit

- Zero temperature output only
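
Two of the items in the list above, the attention stage and the zero-temperature output, can be illustrated with a small Python sketch. This is not the spreadsheet's implementation: it is a minimal single-head example under simplifying assumptions (no learned projection matrices, no multiple heads, toy two-dimensional vectors), and the function names `causal_attention` and `greedy_next_token` are made up for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    Each argument is a list with one vector per token. Token i may only
    look at tokens 0..i, which is how a GPT-style model avoids seeing
    the future.
    """
    dim = len(queries[0])
    outputs = []
    for i, query in enumerate(queries):
        # Similarity of this token's query with every visible key.
        scores = [
            sum(q * k for q, k in zip(query, keys[j])) / math.sqrt(dim)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # Weighted average of the visible value vectors.
        mixed = [
            sum(w * values[j][d] for j, w in enumerate(weights))
            for d in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs

def greedy_next_token(logits):
    """Zero-temperature decoding: always pick the highest-scoring token id."""
    return max(range(len(logits)), key=lambda token_id: logits[token_id])

# Toy example with two tokens (all numbers are invented for illustration).
queries = [[1.0, 0.0], [0.0, 1.0]]
keys = [[1.0, 0.0], [1.0, 0.0]]
values = [[2.0, 0.0], [0.0, 4.0]]

print(causal_attention(queries, keys, values))
print(greedy_next_token([0.1, 2.5, -1.0]))
```

Because the first token can only see itself, its output is simply its own value vector. The second token scores both keys equally here, so its output is the average of the two value vectors. Zero temperature means there is no random sampling: the same input always produces the same next token.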

It is a nice way to get into the topic and build a better understanding of it.
